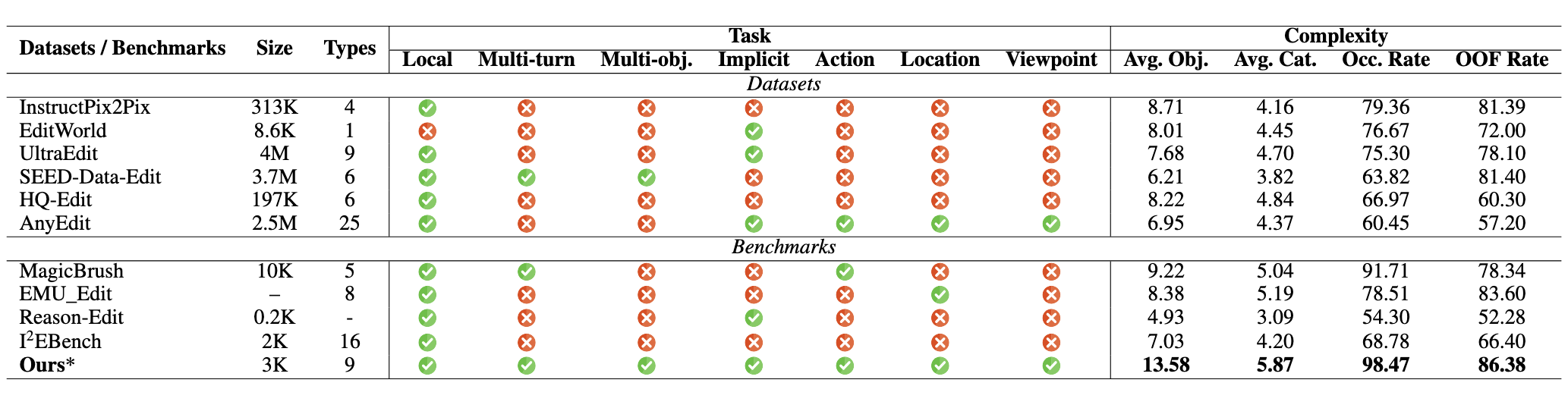

While real-world applications increasingly demand intricate scene manipulation, existing instruction-guided image editing benchmarks often oversimplify task complexity and instruction comprehensiveness. To address this gap, we introduce Comp-Edit, a large-scale benchmark specifically designed for complex instruction-guided image editing. Comp-Edit contains complicated tasks that require fine-grained instruction following, spatial and contextual reasoning, and precise editing from image editing models. To better align instructions with complex editing requirements, we also propose an instruction decoupling method that disentangles editing intents into four dimensions: location (spatial constraints), appearance (visual attributes), dynamics (temporal interactions), and objects (entity relationships). Through extensive evaluations, we demonstrate that Comp-Edit exposes fundamental limitations in current methods, making it a critical tool for advancing next-generation image editing models.

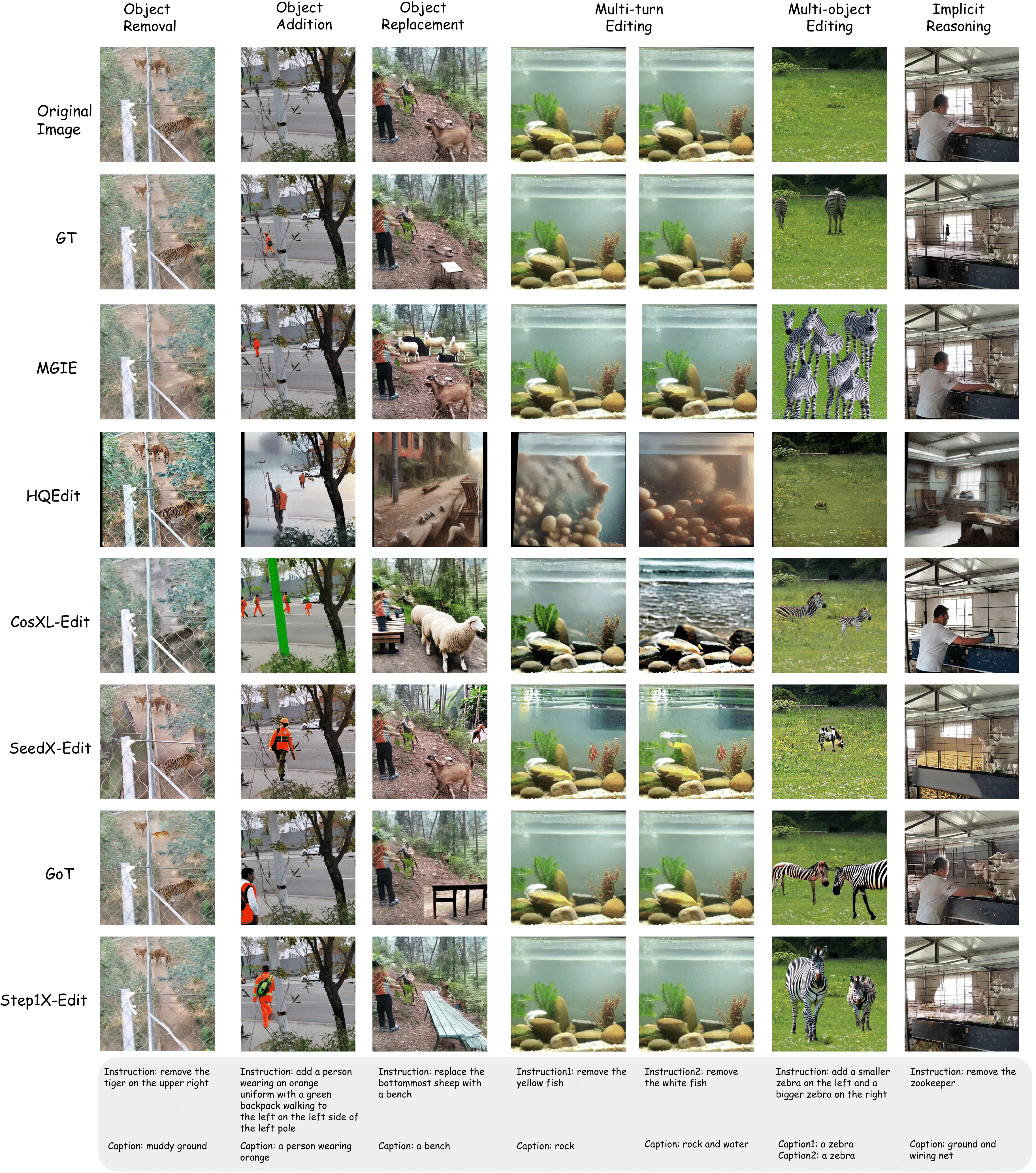

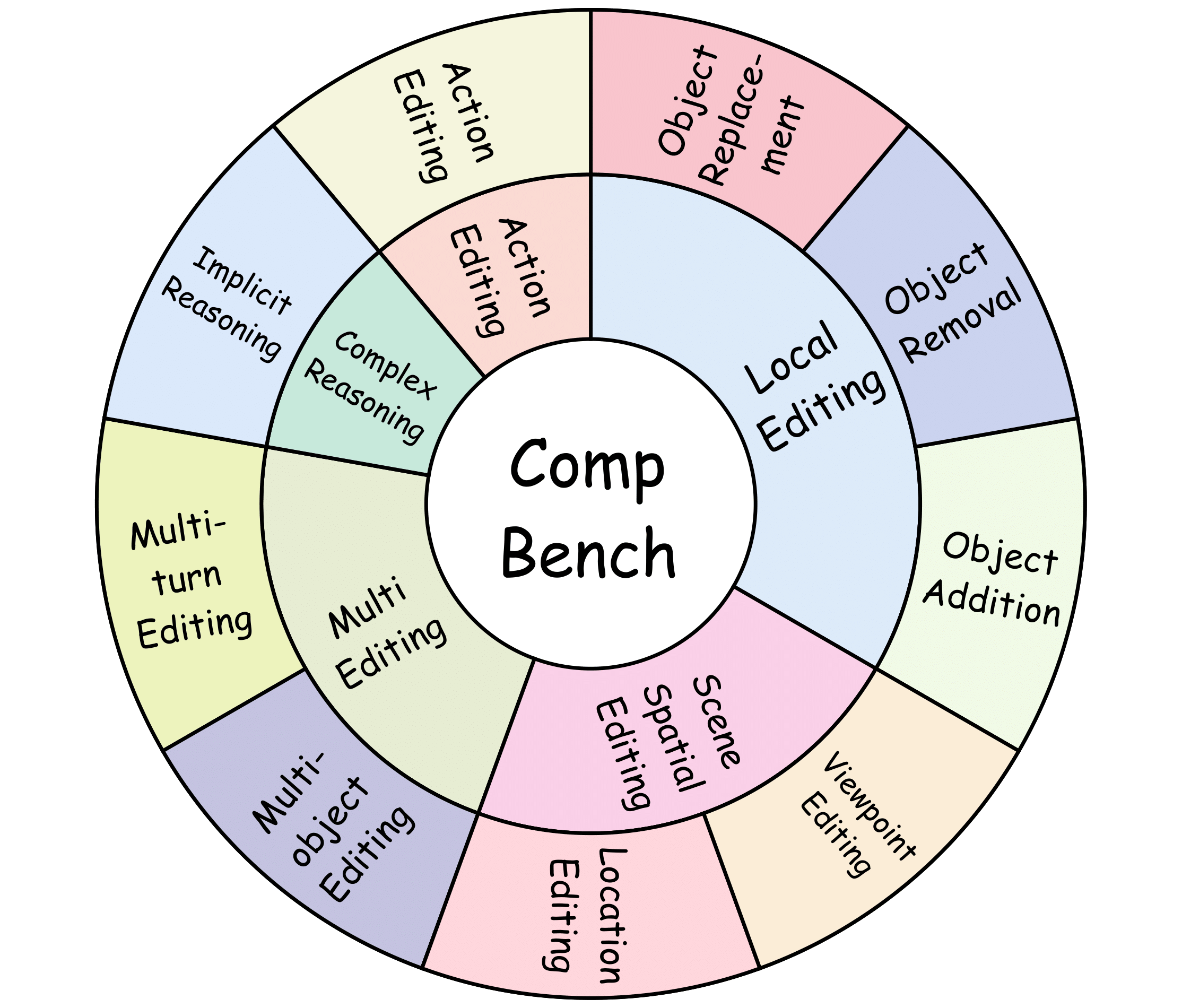

Our complex instruction-guided image editing benchmark, CompBench, contains 3k+ image-instruction pairs. To make the evaluation more comprehensive, we group editing tasks into five major classes, each with specific tasks: (1) Local Editing: manipulates local objects, including object removal, object addition, and object replacement. (2) Multi-editing: addresses interactions among multiple objects or editing steps, including multi-turn editing and multi-object editing. (3) Action Editing: modifies the dynamic states or interactions of objects. (4) Scene Spatial Editing: alters the spatial properties of a scene, consisting of location editing and viewpoint editing. (5) Complex Reasoning: requires implicit logical reasoning to carry out the edit (the implicit reasoning task).
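The taxonomy above can be written down as a small lookup table, which is handy when grouping per-task scores by class. This is only an illustrative sketch: the dictionary name `TASK_TAXONOMY` and the helper `category_of` are hypothetical, the task strings are taken from the description above, and the single task listed under Action Editing is an assumption, since the text names the class but not a separate task label.

```python
# Hypothetical encoding of the five CompBench classes and their tasks.
# Task names follow the prose description; "action editing" as the only
# task of its class is an assumption.
TASK_TAXONOMY = {
    "Local Editing": ["object removal", "object addition", "object replacement"],
    "Multi-editing": ["multi-turn editing", "multi-object editing"],
    "Action Editing": ["action editing"],
    "Scene Spatial Editing": ["location editing", "viewpoint editing"],
    "Complex Reasoning": ["implicit reasoning"],
}


def category_of(task):
    """Return the class that a task belongs to, or raise KeyError."""
    for category, tasks in TASK_TAXONOMY.items():
        if task in tasks:
            return category
    raise KeyError(f"unknown task: {task!r}")


if __name__ == "__main__":
    for category, tasks in TASK_TAXONOMY.items():
        print(f"{category}: {', '.join(tasks)}")
```

For example, `category_of("viewpoint editing")` returns `"Scene Spatial Editing"`, so results reported per task can be rolled up to the class level.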

Every sample in CompBench is constructed through multiple rounds of expert review to ensure high-quality edits. Unlike other benchmarks, where editing failures are common, all data in CompBench represent successfully executed edits, with SSIM (Structural Similarity Index Measure) scores significantly higher than those of other datasets. This rigorous quality control ensures that CompBench provides a reliable assessment of model performance in realistically complex editing scenarios.

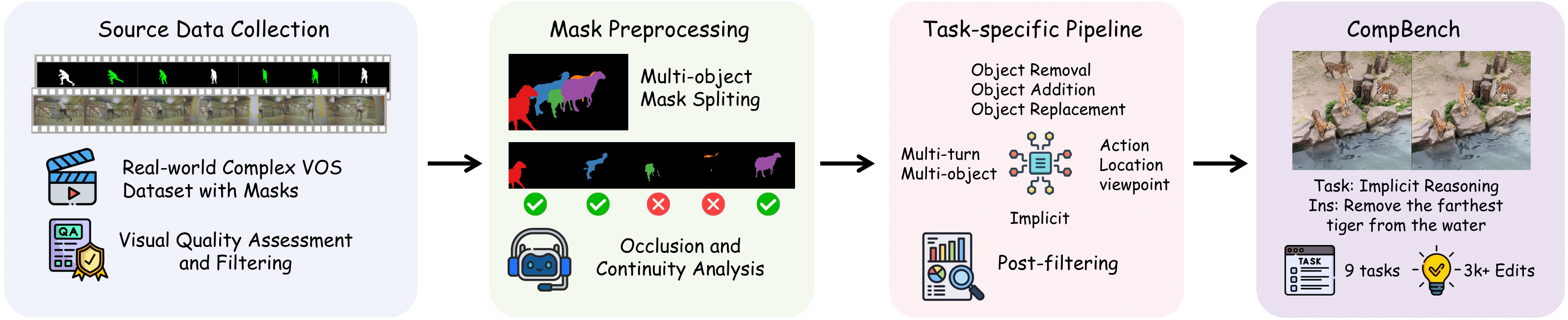

The pipeline consists of two main stages: (a) Source data collection and preprocessing, wherein high-quality data are identified through image quality filtering, mask decomposition, occlusion and continuity evaluation, followed by thorough human verification. (b) Task-specific data generation using four specialized pipelines within our MLLM-Human Collaborative Framework, where multimodal large language models generate initial editing instructions that are subsequently validated by humans to ensure high-fidelity, semantically aligned instruction-image pairs for complex editing tasks.

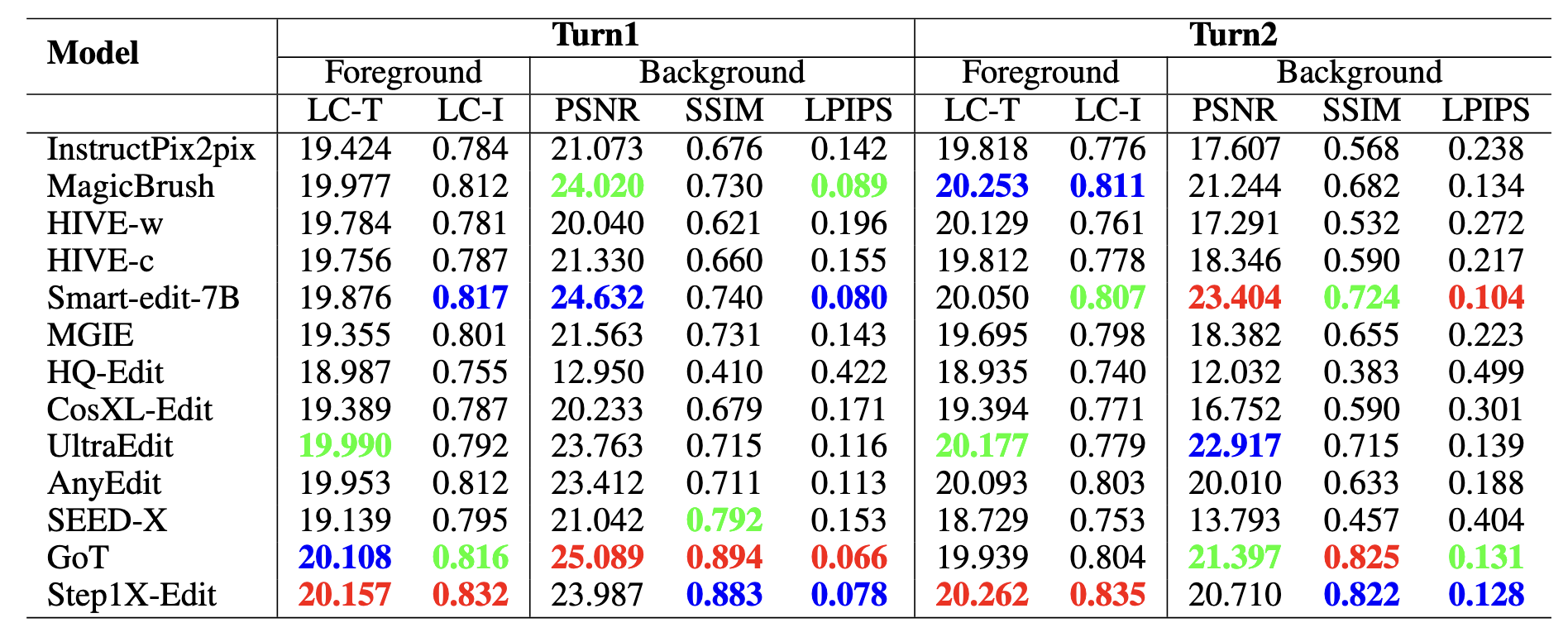

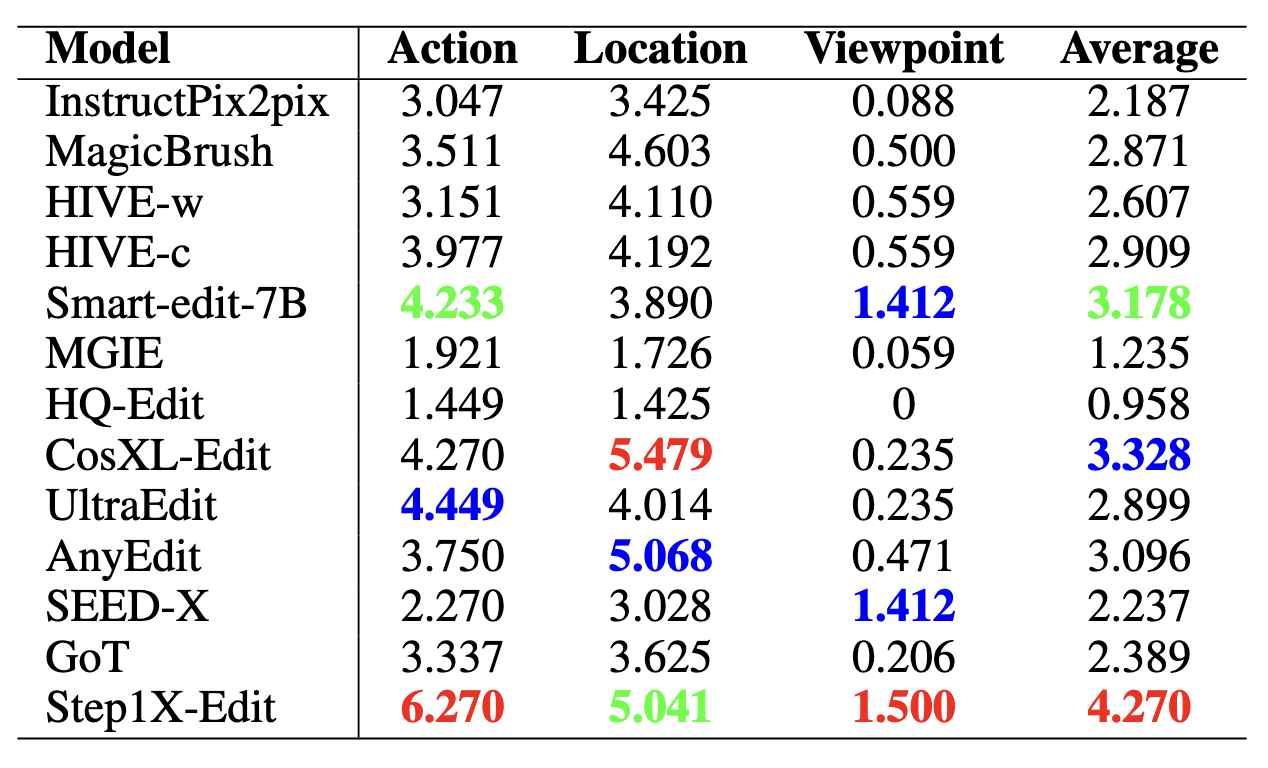

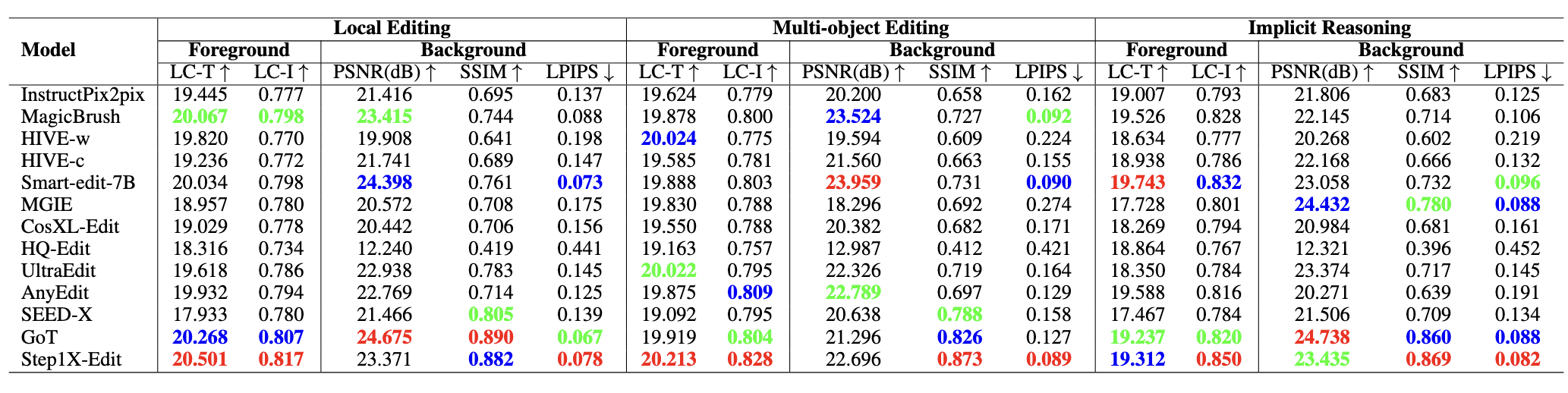

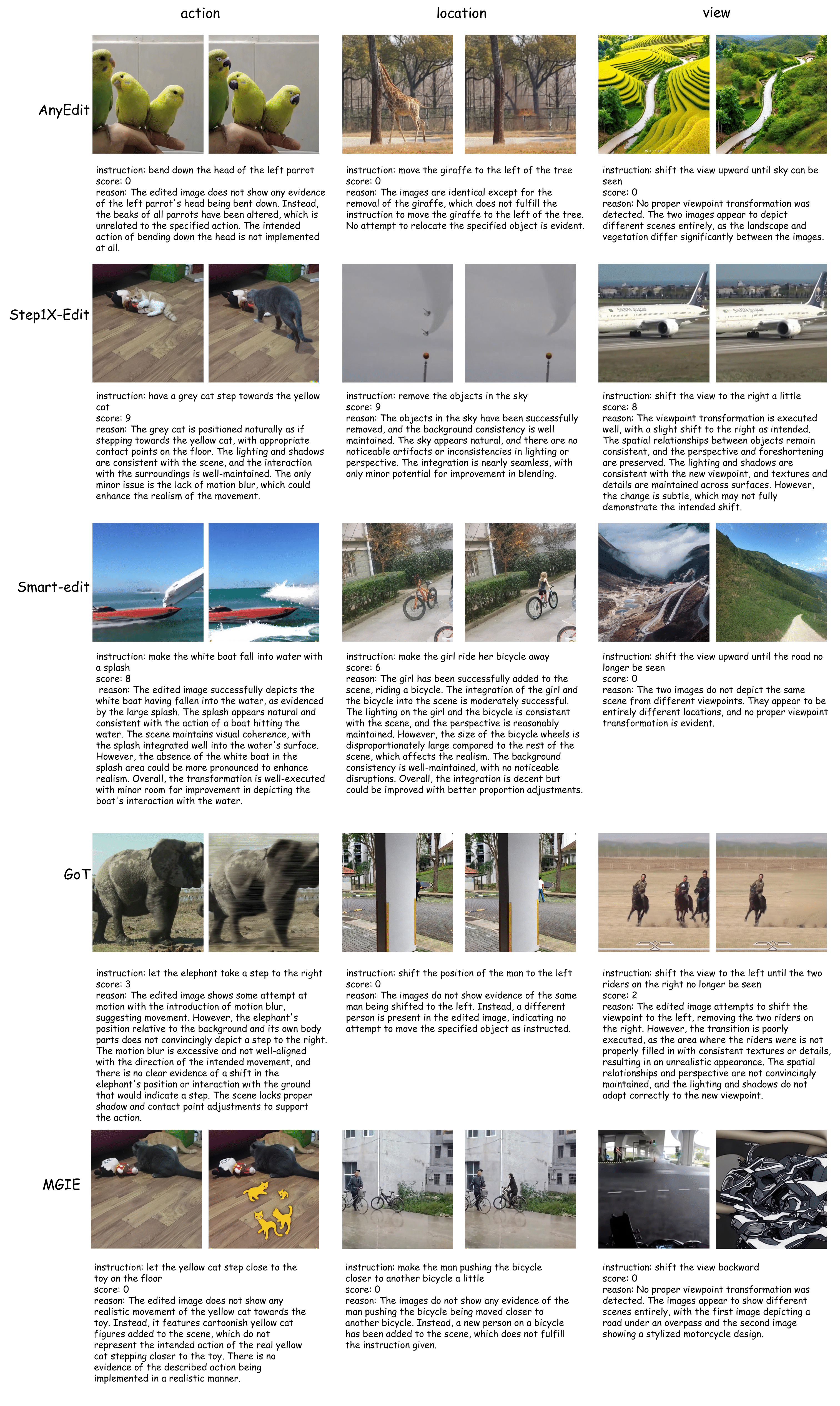

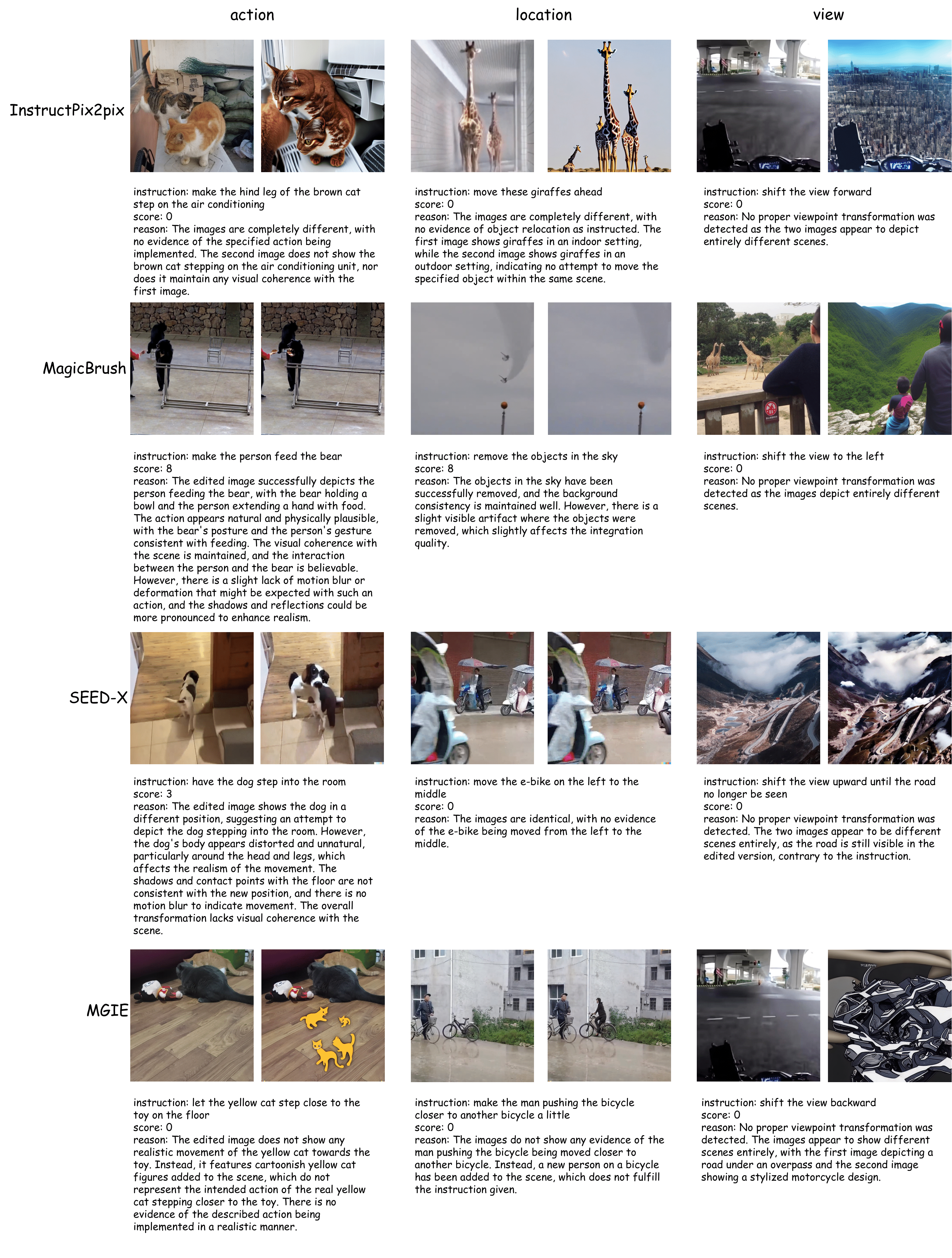

We evaluate instruction-guided image editing models only, including InstructPix2Pix, MagicBrush, HIVE, MGIE, and others. Evaluation covers foreground accuracy and background consistency, using PSNR, SSIM, LPIPS, and CLIP-based metrics. For tasks with major object changes (e.g., action or viewpoint editing), we use GPT-4o with tailored prompts to score edits from 0 to 10.
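As a rough illustration of how a foreground/background split can be scored, the sketch below computes PSNR restricted to a binary mask. It is a minimal sketch under stated assumptions, not the benchmark's evaluation code: the function names `masked_psnr` and `invert_mask` are hypothetical, images are plain 2D lists of grayscale values, and the use of a binary edit mask (1 = edited foreground) to separate the two regions is an assumption.

```python
import math


def masked_psnr(image_a, image_b, mask, max_value=255.0):
    """PSNR between two images, restricted to pixels where mask is truthy.

    image_a, image_b: 2D lists of grayscale intensities with the same shape.
    mask: 2D list of 0/1 flags with the same shape (1 = include the pixel).
    """
    squared_error = 0.0
    count = 0
    for row_a, row_b, row_m in zip(image_a, image_b, mask):
        for a, b, m in zip(row_a, row_b, row_m):
            if m:
                squared_error += (a - b) ** 2
                count += 1
    if count == 0:
        raise ValueError("mask selects no pixels")
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical pixels inside the mask
    return 10.0 * math.log10(max_value ** 2 / mse)


def invert_mask(mask):
    """Swap foreground and background flags."""
    return [[0 if m else 1 for m in row] for row in mask]


if __name__ == "__main__":
    # Toy 2x2 example; the top-left pixel is the assumed edited region.
    edit_mask = [[1, 0], [0, 0]]
    edited = [[110, 50], [60, 70]]
    ground_truth = [[100, 50], [60, 70]]
    print("foreground PSNR:", masked_psnr(edited, ground_truth, edit_mask))
    print("background PSNR:", masked_psnr(edited, ground_truth, invert_mask(edit_mask)))
```

Under these assumptions, a high score inside the mask indicates that the edit matches the target region, while a high score outside it indicates that untouched content was preserved. SSIM and LPIPS could be restricted to a region in the same way, but they need windowed or learned features and are not sketched here.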

LC-T denotes the local CLIP score between the edited foreground and the local description. LC-I denotes the CLIP image similarity between the edited foreground and the ground-truth (GT) image. Top-three evaluation results are highlighted in (1st), blue (2nd), and green (3rd).
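CLIP-based scores of this kind are commonly computed as the cosine similarity between two embedding vectors. The sketch below shows only that final step and makes several assumptions: the embeddings are taken as already produced by a CLIP encoder from the cropped foreground, the local description, or the GT foreground; the function name `cosine_similarity` is illustrative; and any scaling or clipping applied in the actual evaluation is not specified here.

```python
import math


def cosine_similarity(vec_a, vec_b):
    """Cosine similarity between two equal-length embedding vectors."""
    if len(vec_a) != len(vec_b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(vec_a, vec_b))
    norm_a = math.sqrt(sum(a * a for a in vec_a))
    norm_b = math.sqrt(sum(b * b for b in vec_b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    # Toy 3-dimensional stand-ins for real CLIP embeddings (assumed inputs).
    foreground_image_emb = [0.2, 0.9, 0.1]
    local_text_emb = [0.1, 0.8, 0.3]
    gt_foreground_emb = [0.2, 0.9, 0.1]
    print("LC-T-style score:", cosine_similarity(foreground_image_emb, local_text_emb))
    print("LC-I-style score:", cosine_similarity(foreground_image_emb, gt_foreground_emb))
```

In this reading, an LC-T-style score pairs an image embedding with a text embedding, and an LC-I-style score pairs two image embeddings; the similarity computation is the same in both cases.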